Features of the swallowing image

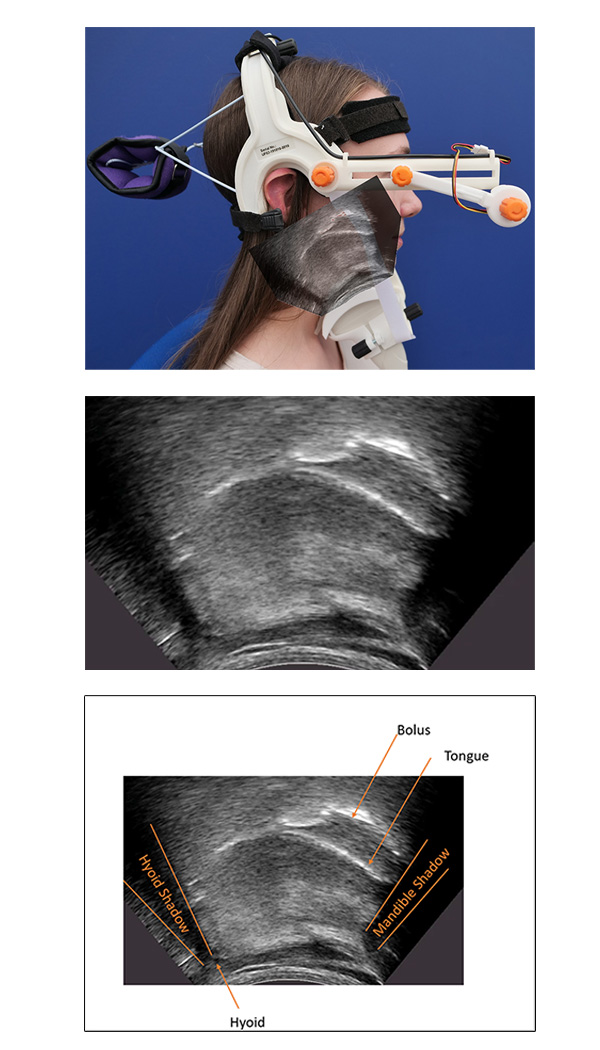

With the ultrasound probe placed midsagittally under the chin, it can be angled to capture a lateral image of the vocal tract that includes the mandible anteriorly, the hard palate superiorly and the hyoid posteriorly. The image below shows a typical USES view, with the key structures labelled in the following figure of the same image. The key structures include:

Mandible - The inferior posterior surface of the mandible between the superior and inferior mental spines is identified by a line at the base of the mandible shadow.

Hyoid - A bright reflection is also often visible where the ultrasound beam encounters the hyoid, highlighting its anterior-inferior surface.

Mandible & hyoid shadows - The mandible and the hyoid both have a significant midsagittal cross-section, which absorbs ultrasound energy and generates a shadow beyond the leading edge of the mandible or the hyoid from the perspective of the probe.

Tongue - Ultrasound reflects from the tissue-to-air boundary, forming a bright edge in the image that outlines the surface of the tongue. However, a water bolus in the oral cavity allows ultrasound to penetrate beyond the tongue surface and reflect off the tongue-to-bolus, bolus-to-air and bolus-to-palate boundaries.

As a result, identifying the tongue surface during a swallowing assessment can occasionally be challenging.

What can be measured automatically

Hyoid and mandible

We have adopted a machine learning approach to automatically estimate hyoid and mandible positions. Our current method uses pose estimation to locate 11 points along the midsagittal tongue surface, one point at the centre of the base of the hyoid shadow, and two points on the mandible corresponding to the superior and inferior mental spines.

Pose estimation treats each frame on its own and does not use temporal information to track the tongue. This has the advantage of being more robust to momentary loss of probe contact. The estimated positions can have small errors, but post-processing can use temporal information to smooth out these errors before measures are taken.

The automatic process requires no manual intervention. The estimation function will run on live input from the ultrasound machine to provide instant estimates of positions at up to 40 frames per second. It can also process stored recordings.

The pose estimation network is trained on a mixture of speech and swallowing images recorded from a range of different ultrasound machine models, different probe geometries and different people. Currently it learns from approximately 2000 hand-labelled frames.
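The post-processing step mentioned above is not specified in detail, so the following is only a minimal sketch of one way per-frame estimates could be smoothed over time. It assumes the estimates are stored as an array of shape (frames, points, 2), with 11 tongue points followed by one hyoid point and two mandible points. The array layout, the function name `smooth_keypoints`, the choice of a sliding median filter and the window length are all illustrative assumptions, not the method actually used.

```python
import numpy as np

# Assumed layout of the estimated points in each frame (illustrative only):
# 11 tongue-surface points, then 1 hyoid point, then 2 mandible points.
N_TONGUE, N_HYOID, N_MANDIBLE = 11, 1, 2
N_POINTS = N_TONGUE + N_HYOID + N_MANDIBLE  # 14 points per frame


def smooth_keypoints(frames: np.ndarray, window: int = 5) -> np.ndarray:
    """Apply a sliding median over time to every x and y coordinate.

    frames: array of shape (n_frames, n_points, 2) holding per-frame estimates.
    window: odd number of frames in the sliding window (hypothetical default).
    Returns an array of the same shape with isolated outliers suppressed.
    """
    if frames.ndim != 3 or frames.shape[2] != 2:
        raise ValueError("frames must have shape (n_frames, n_points, 2)")
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")

    half = window // 2
    # Repeat the first and last frames so the output keeps the input length.
    padded = np.pad(frames, ((half, half), (0, 0), (0, 0)), mode="edge")
    # Resulting shape: (n_frames, n_points, 2, window); the window axis is last.
    windows = np.lib.stride_tricks.sliding_window_view(padded, window, axis=0)
    return np.median(windows, axis=-1)
```

A median filter is used here because it removes a single-frame jump without blurring neighbouring frames; a moving average or a low-pass filter would be equally reasonable choices under different assumptions about the errors.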

Challenges with estimating tongue position

Although estimating hyoid and mandible positions is quite robust, estimating the tongue position during a swallow is more challenging. The surface of a liquid bolus appears in an ultrasound image as a bright reflective surface that can be easily confused with the tongue surface. This is true even for human labellers. The hard and soft palate can also provide a plausible bright edge that can be confused with the tongue surface. In addition, the hyoid shadow moves anteriorly during a swallow, hiding a significant part of the tongue surface. In the example videos you will see that the estimated tongue position is not always accurate. Additional training data, better image quality or an alternative machine learning approach may improve the estimation of tongue position and this is one focus of ongoing work.

Going forward

Once the positions of these anatomical structures are recorded, the challenge is to automatically extract measures that are relevant to assessing swallowing function. This is our current technical research focus.